The Pitfalls of Common Sense in Supply Chain Planning – When good is the enemy of great

This is a guest entry by Luciano de Moura, Managing Partner of UNISOMA – Analytical Planning Systems (an AIMMS implementation partner). Luciano has a Master’s Degree in Automation and Intelligent Systems from UNICAMP. The article below was originally published in the Brazilian magazine Mundo Logística.

There is no doubt that the first step towards good supply chain planning is to make decisions using common sense. But what if these decisions depend on hundreds or even thousands of variables and constraints? What about cases where the process is so complex that potentially interesting alternatives remain unexplored? And what about situations where the scale of production is such that even small changes to the plans translate into millions of dollars? This article argues that relying less on common sense and instead basing planning decisions on the efficient use of analytical tools is the safest way to secure the best results for the company.

Questioning the Common Sense of Decisions

For almost 15 years, I have worked in consulting, providing support for complex planning in sectors such as steel, pulp and paper, livestock production, textiles, cement and consumer goods, both in the Brazilian market and abroad. The methodology I have been advocating and implementing throughout my career is now recognized by the market, with optimism, under the banner of Business Analytics. It may, superficially, be summarized as (i) Prescriptive Solutions, based on Mathematical Optimization, which automatically generate planning suggestions that satisfy the constraints while maximizing the company’s value criteria; (ii) Descriptive Solutions, based on Discrete Event Simulation techniques, which anticipate the consequences of putting one or more production or logistics policies into action; and (iii) Predictive Solutions, derived from statistical modeling (or “black box” methods such as Neural Networks), whose ultimate goal is to estimate important decision-making variables based on the historical behavior of these variables and other related parameters.

Over time, computing platforms have evolved at an unbelievable pace, which has allowed increasingly larger and more complex planning scenarios to be handled through these techniques (Medeiros Milanez, 2010). I have also witnessed, with great satisfaction, how the market has developed an increasing understanding of these mathematical tools and uses them ever more frequently for business management. Truly, what only a few years ago was seen as far-off innovation or merely academic speculation today permeates the discussions of leading managers.

Indeed, I have horrific memories of how difficult it used to be to close sales of analytical tools, simply known as Operational Research at the time, as it was necessary to provide an extensive, in-depth proof of concept covering the principles of the technique used and the results obtained by those who had ventured to use it. Regardless of the name given to these techniques and of how mature the market is in absorbing such tools, one characteristic that endures over time is the manner in which they bring about paradigm shifts in the planning process.

First, the role of the decision maker, often associated with generating the plans (with all the anxiety of the constant replanning present in some markets), gladly shifts towards scenario analysis. This is a more valuable activity from the corporation’s perspective, given his or her knowledge of market conditions and of the business itself and, of course, the understandable human limitation in simultaneously assimilating the thousands of variables and constraints that give the already difficult planning activity its combinatorial character.

Second, these tools promote a significant gain in corporate governance by allowing the systematization of knowledge that would otherwise reside in spreadsheets and in the heads of the heroic decision-makers. It is not difficult to see that the correct implementation of best practices in planning through the use of analytical tools guarantees the sharing of knowledge within the company, while minimizing the impact of the turnover of trained personnel. Another significant aspect is the evolution that tools of this nature bring to the empirical planning model, from one based only on the feasibility of operations to one that maximizes the established criteria (optimized model).

The ability to generate multiple feasible solutions, in time for the right decisions to be made, allows the solution committed to the plan to be chosen based on the KPIs that matter to the company, which goes far beyond finding solutions that are feasible from the operational point of view. But undoubtedly, the key point of this change is the manner in which the solutions generated by these tools question the common sense of the decision makers and call into question false truths that propagate over time and make corporate decisions myopic. It is exactly this evolution which this text is based on. My main point is to illustrate how the quantitative approach of the analytical tools secures the profitable results that often escape more simplistic planning rules. To this end, the remainder of this article draws on real cases that I have experienced in the market to date.

Concerns in the Production of Disassembly

A crucial example that I cite in all my business presentations is the consumption of feed in the livestock production industry, more specifically in the poultry sector. Feed accounts for over 70% of the costs of this supply chain (Embrapa, 2013), and the management of feed for the housed flocks, which are subsequently supplied to the slaughterhouses, treats the feed conversion index (the ratio of the animal’s average feed consumption in a given period to the corresponding weight gain) as the most widely used pillar of slaughter decisions. At the majority of the poultry companies that I visited, the statistical curves for feed consumption showed a clear difference in the feed conversion of sexed lots. Batches of females were significantly less efficient than males under the same conditions, i.e., the females’ rate of weight gain for the same amount of feed declined at a much earlier age than the males’. Based on this behavior, the decision-makers held as an absolute truth the need to systematically slaughter the lots of females before the lots of males as a means of reducing costs. In fact, this decision makes sense from a nutritional point of view, when the focus is only on poultry rearing.

In contrast, when the chain is treated as a whole, this decision may force the company to overlook more lucrative options. The livestock production chain is naturally complex because its raw material is disassembled into many products. Thus, for example, to produce in-natura breasts, valued by the market at a certain time, it is necessary to identify a destination for the thighs, gizzards and necks. And this is before considering the additional difficulties imposed by quality constraints, income variables, the constant closing of external markets, very short shelf lives and the limitations of industrial production.

In this context, basing the choice of which lots to slaughter only on the conversion rate may represent considerable financial losses in the supply chain. The perceived complexity of planning this chain led poultry production companies to invest very early on in mathematical models to support their decisions. Undoubtedly, the most significant case in this line, which now has more than two decades of successful implementation, was that of Sadia, the clear leader of the Brazilian poultry market at the time, which showed a strong inclination toward innovation. To realize financial gains on the order of USD 50 million in the first three years of implementation (Taube-Netto, 1996), the company invested in a computational intelligence framework comprising optimization models and statistical prediction.

The optimization module (Taube-Netto, 1997) made it possible to integrate decisions on meeting demand, on multi-plant production and on determining the most appropriate slaughter profiles for each planning scenario. The ability to act simultaneously on the various decision points meant that changes in market dynamics, in production capacities and resources, and in the characteristics of the housed flocks were immediately reflected in the other links of the chain. As a result, the horizon of visibility expanded, helping to anticipate problems and, above all, allowing the planning to react as quickly as the changes in the chain that made the reaction necessary.

The statistical models, in turn, based on poultry industry indicators (mortality, growth, quality of the farmer, etc.), increased over time the predictability of the condition of the lots of chickens developing in the field and ensured the quality of the information used in constructing the planning scenarios. Considering that the company was responsible for the slaughter of more than 1 million chickens per day at its units in Brazil, imagine the huge initial discomfort among the decision makers in the early 1990s, when this tool quantitatively demonstrated that suspending the slaughter in some of these units for a day would bring significant financial gains.

However absurd and counterproductive this appeared from the perspective of the decision makers, the forced stoppage allowed the lots to reach the ideal weight and quality profiles required to produce the most profitable mix of SKUs to meet the demand of that period.

Mistakes in the Utilization of Bottlenecks

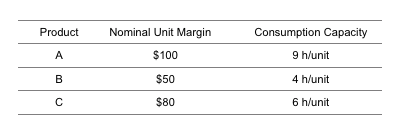

Another example, notable for its recurrence, is the allocation of the limited capacity of bottleneck resources following a deceptive ordering by the nominal margin of the products that compete for those resources. In the jargon of Mathematical Optimization professionals, this way of coordinating the use of limited resources is called a “greedy” technique: it makes the choices that bring the best immediate results, but may yield a poor overall result. For a simplistic but effective illustration, consider a productive resource with a finite availability of 1000 hours in a given period. Ordering production by nominal margin would result in producing 111 units of a single product, A, given the nominal unit margins and capacity consumption shown in the accompanying figure.

This is a didactic example, and the reader may have already noticed that ordering the use of the resource by the ratio between each product’s nominal margin and its capacity consumption would already generate an optimized production plan (1 unit of product B and 166 units of product C). Actual planning scenarios, however, involve thousands of variables and constraints, and in these situations it is virtually impossible to derive rules that generate solutions with a quality equivalent to that of analytical tools. A major pitfall in the strategy of ordering products by nominal margin is that, in some situations, the products at the top of the list are precisely those that consume the bottleneck resources most heavily. Often, a product mix with lower nominal margins is more profitable for the company because it uses the scarce resources less intensively.
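The two ordering rules can be compared in a few lines of code. The sketch below is only an illustration: the unit margins and hours per unit are hypothetical values, chosen so that the resulting plans match the quantities quoted in the text (111 units of A; 1 unit of B and 166 units of C), and they are not the figures from the original table. The names `PRODUCTS`, `greedy_plan` and `exhaustive_best_margin` are likewise invented for this example.

```python
from typing import Callable, Dict, NamedTuple


class Product(NamedTuple):
    margin: int  # nominal unit margin (hypothetical value)
    hours: int   # bottleneck hours consumed per unit (hypothetical value)


# Hypothetical data, consistent with the plans quoted in the text.
PRODUCTS: Dict[str, Product] = {
    "A": Product(margin=10, hours=9),
    "B": Product(margin=4, hours=4),
    "C": Product(margin=8, hours=6),
}
CAPACITY = 1000  # hours available on the bottleneck resource


def greedy_plan(key: Callable[[Product], float],
                capacity: int = CAPACITY) -> Dict[str, int]:
    """Fill the resource product by product, best `key` value first."""
    plan: Dict[str, int] = {}
    remaining = capacity
    ranked = sorted(PRODUCTS, key=lambda name: key(PRODUCTS[name]), reverse=True)
    for name in ranked:
        units = remaining // PRODUCTS[name].hours
        plan[name] = units
        remaining -= units * PRODUCTS[name].hours
    return plan


def total_margin(plan: Dict[str, int]) -> int:
    return sum(PRODUCTS[name].margin * units for name, units in plan.items())


def exhaustive_best_margin(capacity: int = CAPACITY) -> int:
    """Try every integer mix of A and C; leftover hours go to B."""
    a, b, c = PRODUCTS["A"], PRODUCTS["B"], PRODUCTS["C"]
    best = 0
    for units_a in range(capacity // a.hours + 1):
        left_after_a = capacity - units_a * a.hours
        for units_c in range(left_after_a // c.hours + 1):
            units_b = (left_after_a - units_c * c.hours) // b.hours
            best = max(best, units_a * a.margin + units_c * c.margin
                       + units_b * b.margin)
    return best


by_margin = greedy_plan(lambda p: p.margin)           # order by nominal margin
by_ratio = greedy_plan(lambda p: p.margin / p.hours)  # order by margin per hour

print("by nominal margin:", by_margin, total_margin(by_margin))
print("by margin per hour:", by_ratio, total_margin(by_ratio))
print("best possible margin:", exhaustive_best_margin())
```

Under these assumed numbers, ordering by nominal margin fills the resource with product A alone, while ordering by margin per hour reaches the same total as the exhaustive check. That agreement is a property of this toy instance: with several bottleneck resources and coupled constraints, no single ordering rule is guaranteed to find the best plan, which is where an optimization model earns its keep.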

Surprises in the Analysis of Logistics Networks

The large public works which took shape in Brazil as a result of the Growth Acceleration Program (PAC, Programa de Aceleração do Crescimento) launched by the Federal Government, as well as the upcoming World Cup in 2014 and Olympic Games in 2016, had obvious impacts on the growth strategies and market coverage of the cement companies in the country. As companies in this sector operate throughout the country, analyses of the expansion of the logistics network were based on the discretization of the territory into mesoregions. Structural changes in a mesoregion, whether due to the expansion of capacity at existing plants, the acquisition of new assets or the divestiture of a part of the network, are quickly reflected in the remainder of the network, generating the need for adjustments in the national operations as a whole.

Thus, as an illustration, the implementation of a new production plant in a given mesoregion enables the direct supply of a certain market that was previously served by another plant or by a combination of others. As a direct consequence, these other plants begin serving other markets and the effect propagates throughout the distribution network. It takes little effort to verify that the same ripple effect also occurs in inbound logistics, in the selection and development of suppliers, in the right product mix per plant, etc. Repeatedly, during pre-sales discussions of logistics network analyses involving analytical tools, I encountered heated debates about how simple the decisions to be made supposedly were. Underestimating the complexity of this type of challenge is common, but it poses a risk to the adherence and quality of decisions that, as a rule, involve significant investment. The intricate tax regulations which affect interstate transport derail the obvious decision to serve a given market from the closest production plants as a way to minimize freight costs. The variety of incentives, taxes and fees in our tax laws “deforms” the map of the country and requires that analyses of investments in logistics networks consider logistics costs and taxation simultaneously, creating a major challenge for the decision makers.

I have lost count of how many times I witnessed the discomfort of decision makers in this industry, accustomed to traditional rules and decision tools, when faced with extremely lucrative expansion plans that produced quite controversial market supply solutions, such as having a production plant and an active market in the same mesoregion and yet meeting the demand from a plant located in the neighboring state. This effect is common in analyses that weigh the trade-off between logistics costs, production costs and the taxes levied on operations. It is exactly in balancing these criteria, which condition the logistics network decisions, that analytical decision-support tools make their fundamental contribution.
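The trade-off described above can be made concrete with a deliberately tiny sketch. All figures, the plant names and the single per-ton `tax` component are hypothetical assumptions for illustration; they do not come from the cement study and do not model actual Brazilian tax rules. The sketch only shows how the nearest-plant rule and the lowest-total-cost rule can point to different plants for the same market.

```python
from typing import Dict, NamedTuple


class Lane(NamedTuple):
    """Per-ton costs of serving one market from one plant (all hypothetical)."""
    production: float  # production cost at the plant
    freight: float     # freight cost from the plant to the market
    tax: float         # net tax burden on this plant-to-market flow

    def landed_cost(self) -> float:
        return self.production + self.freight + self.tax


# Two hypothetical ways of serving the same market.
LANES: Dict[str, Lane] = {
    "local_plant": Lane(production=200.0, freight=10.0, tax=60.0),
    "neighbor_state_plant": Lane(production=200.0, freight=35.0, tax=20.0),
}

# Common-sense rule: serve the market from the plant with the lowest freight.
nearest = min(LANES, key=lambda plant: LANES[plant].freight)

# Total-cost rule: weigh production, freight and tax together.
cheapest = min(LANES, key=lambda plant: LANES[plant].landed_cost())

print("nearest plant: ", nearest, LANES[nearest].landed_cost())
print("cheapest plant:", cheapest, LANES[cheapest].landed_cost())
```

With these assumed values the local plant wins on freight but loses on total cost per ton. A real network study evaluates many plants, markets and capacity limits at once, so the choice for one market also depends on what every other plant is asked to supply.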

More recently, I participated in generating the equally controversial results of a study to revise the logistics network of a large textile manufacturer in the Southern Region of Brazil. With manufacturing operations also in North/Northeast Brazil, the company envisioned rapid market growth over the next 5 years and needed to adjust its logistics structure during that period in order to stay aligned with the expansion plans and to secure the forecasted results. This translated into analyzing the need for, and the ideal location of, one or more distribution centers in Brazil which, considering the current structure and the expected future growth, would enable the replenishment of inventory and distribution to numerous customers, reducing operating costs while maintaining market coverage at very aggressive levels.

The challenge extended to sizing the required inventory and determining the most suitable legal structure for these new centers. To illustrate the complexity of these analyses: by combining the logistics variables (freight costs, transit times, distribution of the plants and markets, picking versus cross-docking, etc.), the tax variables (tax incentives, VAT, tax structure of subsidiaries, of the warehouse or of third parties, etc.) and the operating variables (production and storage capacities, outsourcing, etc.), the scenarios reached more than 60,000 variables. It did not surprise me that the analytical tool used as a basis for the analysis produced expansion scenarios for the network with a cost reduction of over R$ 7 million annually, compared with the current physical structure and the current operating model.

However, it took a significant number of additional hours of robustness testing to substantiate the by no means trivial idea that the first distribution center in the plan should be implemented in the same state as the manufacturing plant which now accounts for over 90% of the distribution to the market. As in the case of cement, this result is a consequence of properly treating the challenge of balancing all the costs of the chain, from procurement to distribution logistics, including the tax burden.

Final Common Sense Considerations

It is not my goal, nor is it within the scope of this article, to devalue common sense as the basis of the planning processes of the Supply Chain. There is no doubt that this is what supports sound decision-making. However, it is necessary to be aware of the complexity of the planning process, generated by the usual combinatorial nature of the decisions and by the frequent interconnection between innumerable variables and conflicting constraints, which hinders and even prevents the identification of the most interesting courses of action. The cases reported in this article illustrate, in a variety of sectors and at different decision levels, how trivial solutions and seemingly more sensible alternatives can be misleading and lead to action plans that depart from what would, in fact, be profitable for the company. In the cases described, the support of analytical tools in prescribing the plans and in handling the complexities of the decision-making processes brought about, as a rule, significant direct results.

More importantly, however, it allowed the decision-makers to critically simulate different planning scenarios, an activity that was previously unfeasible because all available time had to be spent on merely constructing the plans. It is on this critical activity that the common sense of the decision-makers should be focused.

References

- Embrapa, “Custo de produção de frangos de corte cai 2,80% em julho [Cost of broiler chicken production drops 2.80% in July]”, report published on the website http://www.cnpsa.embrapa.br/?ids=&idn=1235, 28/08/2013.

- Medeiros Milanez, Eduardo, “25 years of O.R. in Brazil”, OR/MS Today, (April) 2010.

- Taube-Netto, Miguel, “Integrated Planning for Poultry Production at Sadia”, Interfaces, Vol. 26, pp. 38-53, (January/February) 1996.

- Taube-Netto, Miguel, “Tecnologia das Decisões – Novo Paradigma [Decision Technology – New Paradigm]”, Revista Agrosoft, Vol. 2, pp. 20-25, (June/July) 1997.
